Software

Mashed up: The Matrix - Reloaded

Mon, 07/05/2007 - 18:38 — balint

The following snippet of the Burly Brawl from The Matrix: Reloaded has been passed through my hacked version of libavcodec to reverse the direction of each motion vector:

libavcodec & ffdshow

Mon, 07/05/2007 - 18:34 — balint

To perform these experiments, I needed to be able to tweak the source code of a video decompressor. Since I do most of my development on the Windows platform using Visual Studio, and I wanted to support as many codecs that use motion compensation as possible, my options were limited. libavcodec, part of ffmpeg, is the library for encoding and decoding a whole host of video and audio codecs. There is one catch, however: it is written in C using C99 features, and VC does not compile C99. Therefore I (back-)ported libavcodec to the Windows platform so I could use a GUI debugger and quickly learn the structure of the code. This would allow me to make quick changes and evaluate the results.

Motion Vector Experiments

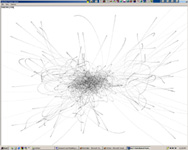

Mon, 07/05/2007 - 18:28 — balint

The use of motion vectors for motion compensation in video compression is ingenious - another testament to how amazing compression algorithms are. I thought it would be an interesting experiment to get into the guts of a video decoder and attempt to distort the decoded motion vectors before they are actually used to move the macro-blocks (i.e. before they affect the final output frame). My motivation - more creative in nature - was to see what kinds of images would result from different types of mathematical distortion. The process would also help me better understand the lowest levels of video coding.

In the end I discovered the most unusual effects could be produced by reversing the motion vectors (multiplying their x & y components by -1). The stills and videos shown here were created using this technique. Another test I performed was forcing them all to zero (effectively turning off the motion compensation) but the images were not as 'compelling'.
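As a rough illustration of the two distortions described above, here is a minimal C++ sketch. It is not the actual libavcodec patch: `MotionVector`, `Distortion` and `distortMotionVectors` are hypothetical names, and libavcodec stores its motion vectors in its own internal structures. The sketch only shows the arithmetic applied to each vector before motion compensation would use it.

```cpp
#include <vector>

// Hypothetical stand-in for a decoded motion vector; libavcodec's real
// data structures are laid out differently.
struct MotionVector {
    int x;
    int y;
};

// The two distortions mentioned in the text.
enum class Distortion { Reverse, Zero };

// Distort every vector in place, before motion compensation would use it.
void distortMotionVectors(std::vector<MotionVector>& vectors, Distortion mode) {
    for (MotionVector& mv : vectors) {
        if (mode == Distortion::Reverse) {
            // Multiply both components by -1.
            mv.x = -mv.x;
            mv.y = -mv.y;
        } else {
            // Force the vector to zero, which effectively turns off
            // motion compensation for that block.
            mv.x = 0;
            mv.y = 0;
        }
    }
}
```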

Here are some stills with Neo and The Oracle conversing from The Matrix: Reloaded after the video's motion vectors have been distorted by my hacked version of libavcodec:

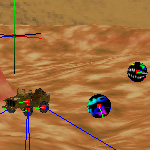

DS (AKA Driving Simulator)

Mon, 07/05/2007 - 17:37 — balint

This is "THE Game Mk II"! concocted with my 'partner in crime' Xianhang "Hang" Zhang. There's ~20k lines of code after I coded for about 2 weeks straight.

Humble Beginnings (AKA THE Game)

Mon, 07/05/2007 - 17:23 — balint

For the tutorials of the second-session-first-year C course, I joined the advanced tutorial where we decided our end-of-term goal would be to create a little networked game. What high hopes we had... (And I still haven't taken down the message board.)

Presentation Day

Mon, 07/05/2007 - 13:57 — balint

This is the presentation we gave to demonstrate Teh Engine during the final Computer Graphics lecture in front of a full lecture hall of ~300 students.

(Thanks Ashley "Mac-man" Butterworth for operating the camera.) Please excuse my 'ah's and 'um's - it happens when I'm really tired. Was up for >48 hours.

It should be noted that I fixed the physics so the car behaves properly. Please read the previous page for more detail about the engine.

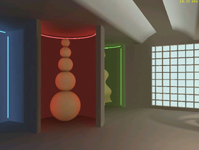

Teh Engine

Mon, 07/05/2007 - 13:50 — balint

Teh Engine is a graphics engine I wrote in C++ using OpenGL. It boasts many features but can also be considered as re-inventing the wheel. However, I believe writing such a complex piece of software is a 'rite of passage' for anyone seriously interested in computer graphics and creating well designed code.

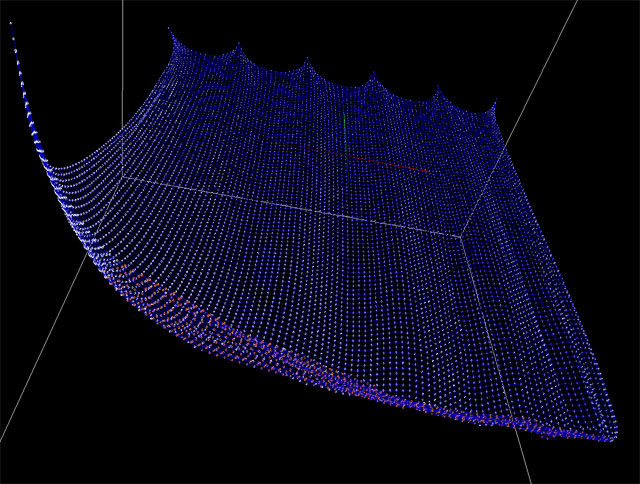

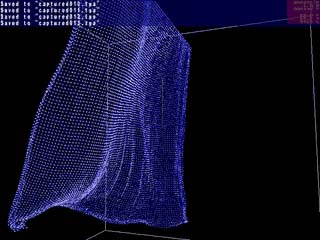

Cloth

Mon, 07/05/2007 - 12:14 — balint

Building on the foundations of the particle simulation, my friend Dom De Re and I decided to dabble in the creation of cloth. The parameters are highly tunable and make for interesting results and 'explosions'.

Having come to rest under gravity (the orange particles at the bottom indicate a collision with the floor):

Graphical Enhancements

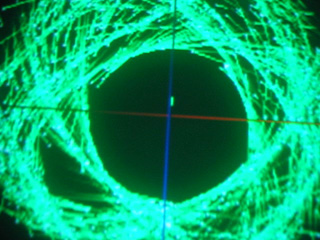

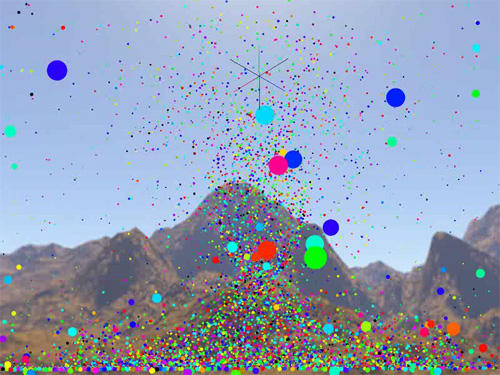

Mon, 07/05/2007 - 11:56 — balint

Although I like the basic particle-line aesthetic presented in the previous pictures and videos, I felt it time to add a bit of colour, texture and lighting to the simulation.

Here you can see me tearing my face up in a lit environment:

I re-enabled the skybox in Teh Engine and used an image of the full-saturation hue wheel to give the particles a 'random' colour:

(I think this is reminiscent of the Sony Bravia LCD TV ad!)

Torn Cloth Tornado

Mon, 07/05/2007 - 11:48 — balint

Here is a newer video of tearing the cloth and enabling the tornado simulation after reducing the cloth to small fragments:

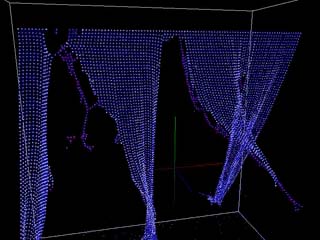

Tearable Cloth

Mon, 07/05/2007 - 10:38 — balint

To make the simulation even more fun I made it possible to tear the cloth by shooting a red bullet at the grid. The red sphere is pulled downward under the influence of gravity and breaks any constraints within its radius.
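The tearing rule can be sketched in a few lines of C++. This is only an illustration, not Teh Engine's code: `Vec3`, `Constraint` and `tearCloth` are hypothetical names, and "within its radius" is interpreted here as "at least one endpoint particle lies inside the bullet sphere", which is an assumption.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// A cloth link between two particles, referenced by index (hypothetical layout).
struct Constraint {
    std::size_t a;
    std::size_t b;
    float restLength;
};

static float distanceSquared(const Vec3& p, const Vec3& q) {
    const float dx = p.x - q.x;
    const float dy = p.y - q.y;
    const float dz = p.z - q.z;
    return dx * dx + dy * dy + dz * dz;
}

// Remove every constraint with at least one endpoint inside the bullet
// sphere. Returns the number of constraints that were broken.
std::size_t tearCloth(std::vector<Constraint>& constraints,
                      const std::vector<Vec3>& positions,
                      const Vec3& bulletCentre,
                      float bulletRadius) {
    const float r2 = bulletRadius * bulletRadius;
    auto broken = [&](const Constraint& c) {
        return distanceSquared(positions[c.a], bulletCentre) <= r2 ||
               distanceSquared(positions[c.b], bulletCentre) <= r2;
    };
    const std::size_t before = constraints.size();
    constraints.erase(
        std::remove_if(constraints.begin(), constraints.end(), broken),
        constraints.end());
    return before - constraints.size();
}
```

Calling `tearCloth` once per simulation step with the bullet's current position would leave a trail of broken links along its path.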

The early videos follow - click to download them. The later (and much better) videos can be found throughout the following pages.

- Tearing the cloth

- Colouring the cloth

- Running the tornado with connected particles

- Blowing in the wind

- External view of tearing the cloth

- Zoom from one view of the tornado to another

Tornado #1: Initial Implementation

Mon, 07/05/2007 - 10:33 — balint

This video demonstrates the tornado in action:

Cosmology Major Project

Sat, 05/05/2007 - 13:46 — balint

A system for distributed extensible particle simulations over multiple computers. Unfortunately I haven't exactly got around to distributing it, although thanks to the generosity of Steven Foster, the many lab computers at my school are waiting. My report details the process and simulation design.

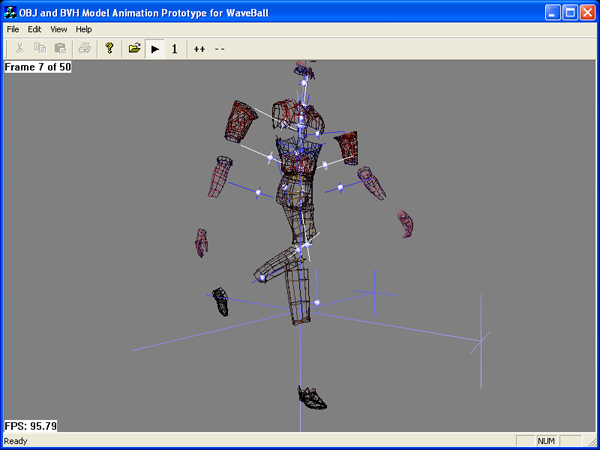

Walker

Sat, 05/05/2007 - 12:36 — balint

I wanted to try some skeletal animation so I wrote this test program.

It imports meshes, materials and animations from Poser and plays them back.

An exploded figure walking:

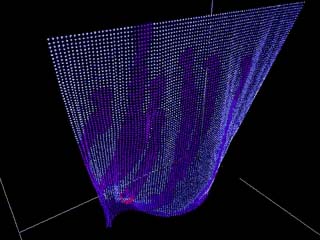

Verlet-integration based particle simulations

Fri, 04/05/2007 - 17:18 — balint

I have been continually developing a Verlet-integration based particle system inside Teh Engine and have produced a number of interesting results. The two main themes of simulated phenomena are tornados and cloth. You can read more about these individual experiments in the next sections, as well as watch videos of the results.

An excellent resource for Verlet-integration can be found at Gamasutra.
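For readers unfamiliar with the technique, here is a minimal sketch of a single position-Verlet step in C++. It is a textbook form of the integrator, not code from Teh Engine, and the names `Vec3`, `Particle` and `verletStep` are hypothetical. Velocity is never stored; it is implied by the difference between the current and previous positions.

```cpp
struct Vec3 {
    float x, y, z;
};

// A particle only needs its current and previous positions.
struct Particle {
    Vec3 position;
    Vec3 previous;
};

// One position-Verlet step: x_next = 2 * x - x_prev + a * dt^2.
void verletStep(Particle& p, const Vec3& acceleration, float dt) {
    const Vec3 current = p.position;
    const float dt2 = dt * dt;
    p.position.x = 2.0f * current.x - p.previous.x + acceleration.x * dt2;
    p.position.y = 2.0f * current.y - p.previous.y + acceleration.y * dt2;
    p.position.z = 2.0f * current.z - p.previous.z + acceleration.z * dt2;
    // The old current position becomes the previous position.
    p.previous = current;
}
```

Because the state is just positions, constraints such as cloth links or a floor can be enforced by moving particles directly, which is one reason the scheme suits this kind of simulation.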

Here are some stills:

WebRadio

Fri, 04/05/2007 - 14:20 — balint

SKIP THE CHIT-CHAT, LET ME USE IT*!

* Please note: WebRadio is only available when I have the computers and radios switched on. (And I don't usually do this as electricity does not grow on trees and fire is bad. Did I mention I have to pay for uploads too?) If it says it "Can't connect to the server" and you'd like to give it a whirl, please do not hesitate to email me (bottom of front page) and I'll switch it on for you.

TehDetector

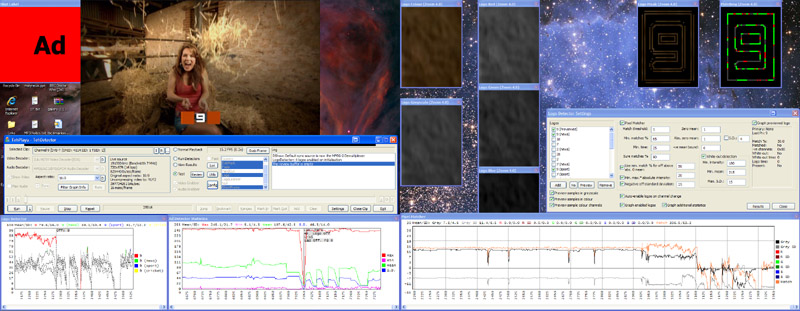

Fri, 04/05/2007 - 14:06 — balint

I wrote a Windows-based video analysis and processing framework to underpin the research I undertook for my undergraduate thesis.

Some of the features it boasts:

- DirectShow-based: Enables analysis and processing of any video/audio format that DShow supports.

- Seeking with frame-accuracy in an MPEG-2 stream: This handy feature I implemented, when used with certain filters, enables frame-accurate seeking, which is otherwise not possible with the standard filter graph (i.e. source filter and demultiplexer).

- Input live streaming TV: I use a Terrestrial Digital Video Broadcasting card to feed TehDetector live video, which it processes in real-time. It (obviously) also supports offline analysis of stored content.

- Extensible detector architecture: Detectors (analysis components) can be written in C++ and added at will to the framework to extend its capabilities. I implemented several detectors in a hierarchical fashion to find television commercials in a live stream.

- Automatic frame caching: The framework automatically caches video frames and optimises analysis during runtime by also caching various calculations performed on an incoming frame.

- Event store/viewer: Detectors can output 'events' that describe a detected feature in the video, which are automatically stored and managed by the framework. These events can then later be reviewed in an intuitive manner.

- Lots of others: detector timing profiler, signal strength meter, playback rate control, filter graph management, bookmarking, frame dumping, extensive keyboard shortcuts...
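To give a feel for what an extensible detector architecture with an event store might look like, here is a small C++ sketch. Everything in it is hypothetical: the `Frame`, `Event`, `Detector`, `BlackFrameDetector` and `Framework` types are invented for illustration and are not TehDetector's real classes, and the dark-frame check is just a convenient example of a detector, not necessarily one of the detectors used in the thesis.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical per-frame data handed to detectors.
struct Frame {
    long index;
    double meanLuma;  // average brightness, assumed precomputed and cached
};

// Hypothetical event describing a detected feature.
struct Event {
    long frameIndex;
    std::string description;
};

// Interface every detector implements.
class Detector {
public:
    virtual ~Detector() = default;
    virtual void analyse(const Frame& frame, std::vector<Event>& events) = 0;
};

// Example detector: flags frames darker than a threshold.
class BlackFrameDetector : public Detector {
public:
    explicit BlackFrameDetector(double threshold) : threshold_(threshold) {}

    void analyse(const Frame& frame, std::vector<Event>& events) override {
        if (frame.meanLuma < threshold_) {
            events.push_back({frame.index, "black frame"});
        }
    }

private:
    double threshold_;
};

// Minimal framework: owns the detectors and stores the events they emit.
class Framework {
public:
    void addDetector(std::unique_ptr<Detector> detector) {
        detectors_.push_back(std::move(detector));
    }

    void process(const Frame& frame) {
        for (auto& detector : detectors_) {
            detector->analyse(frame, events_);
        }
    }

    const std::vector<Event>& events() const { return events_; }

private:
    std::vector<std::unique_ptr<Detector>> detectors_;
    std::vector<Event> events_;
};
```

New analysis components are added by deriving from `Detector` and registering an instance with `addDetector`; the framework code itself does not change.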

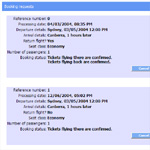

Real-time detection of commercials on television

Fri, 04/05/2007 - 12:35 — balint

For my undergraduate honours thesis I conducted research into the unbuffered real-time detection of commercials on television with a view to muting the volume when ads are being broadcast. The research itself dealt with examining the features required to enable robust real-time detection. I developed a sophisticated video analysis and processing framework to underpin experimentation and compilation of results.

The following screenshots show the system running live (click on one to see the full-res image):

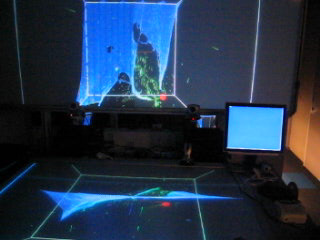

TVisionarium Mk II (AKA Project T_Visionarium)

Tue, 01/05/2007 - 16:13 — balint

An inside view into iCinema's Project T_Visionarium:

(We tend to drop the underscore though, so it's referred to as TVisionarium or simply TVis).

This page summarises (for the moment below the video) the contribution I made to TVisionarium Mk II, an immersive eye-popping stereo 3D interactive 360-degree experience where a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them to explore intuitively their semantic similarities and differences.