T_Visionarium (AKA Project TVisionarium Mk II)

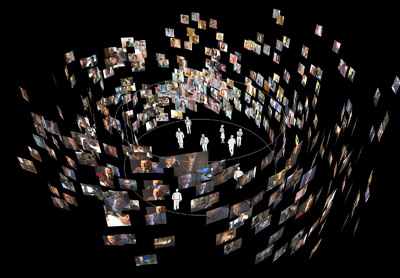

This series of pages summarises the contribution I made to TVisionarium Mk II, an immersive eye-popping stereo 3D interactive 360-degree experience where a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them to explore intuitively their semantic similarities and differences.

It is a research project undertaken by iCinema, The iCinema Centre for Interactive Cinema Research at the University of New South Wales (my former uni) directed by Professor Jeffrey Shaw and Dr Dennis Del Favero. More information about the project itself, Mk I and the infrastructure used, is available online.

I was contracted by iCinema to develop several core system components during an intense one month period before the launch in September of 2006. My responsibilities included writing the distributed MPEG-2 video streaming engine that enables efficient clustered playback of the shots, a distributed communications library, the spatial layout algorithm that positions the shots on the 360-degree screen and various other video processing utilities. The most complex component was the video engine, which I engineered from scratch to meet very demanding requirements (more details are available on the next page).

Luckily I had the pleasure of working alongside some wonderfully talented people: in particular Matt McGinity (3D graphics/VR guru), as well as Jared Berghold, Ardrian Hardjono and Tim Kreger.