Graphics

My Contribution

Thu, 10/01/2008 - 12:33 — balint

I wrote the following major system components:

1) A multi-threaded, load-balancing MPEG-2 video decoder engine, featuring:

   - Automatic memory management & caching

   - Level-Of-Detail support and seamless transitions

   - Continuous playback or shot looping (given cut information)

   - Asynchronous loading and destruction

[Initial tests indicate it can play over 500 videos simultaneously on one computer (with 2 HT CPUs and 1GB of RAM at the lowest LOD). TVisionarium is capable of displaying a couple of hundred videos without any significant degradation in performance, but there's so much still to optimise that I would be surprised if it couldn't handle in excess of 1000.]

With my latest optimisations, TVisionarium is able to play back 1000 shots simultaneously!

While profiling the system, total CPU usage averages around 90-95% on a quad-core render node!

This indicates that those optimisations have drastically minimised lock contention and support far more fluid rendering.

Have a look at TVis in the following video:

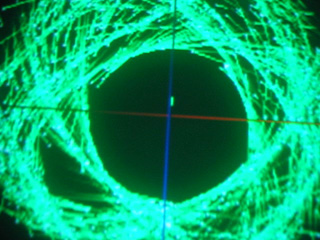

This is an in-development 'video tube' test of the video engine:

(Watch it on youtube.com to leave comments/rate it if you like.)

Video Behind the Scenes

Thu, 10/01/2008 - 12:22 — balint

1000 videos can be seen playing back simultaneously!

This is a preview video produced by iCinema:

Events

Thu, 10/01/2008 - 12:07 — balint

T_Visionarium was officially launched on 08/01/2008 as part of the 2008 Sydney Festival. Please read my blog post about it. Here are some pictures:

The festival banner:

Crowd before the speeches:

The digital maestros (Matt McGinity & I):

Appearances in TV News

Thu, 10/01/2008 - 11:40 — balint

09/01/2007 - SBS World News:

August 2006 - Channel Nine News:

T_Visionarium (AKA Project TVisionarium Mk II)

Thu, 10/01/2008 - 11:35 — balint

This series of pages summarises the contribution I made to TVisionarium Mk II, an immersive, eye-popping, stereo 3D, interactive 360-degree experience where a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them, to intuitively explore their semantic similarities and differences.

It is a research project undertaken by iCinema, The iCinema Centre for Interactive Cinema Research at the University of New South Wales (my former uni) directed by Professor Jeffrey Shaw and Dr Dennis Del Favero. More information about the project itself, Mk I and the infrastructure used, is available online.

I was contracted by iCinema to develop several core system components during an intense one-month period before the launch in September of 2006. My responsibilities included writing the distributed MPEG-2 video streaming engine that enables efficient clustered playback of the shots, a distributed communications library, the spatial layout algorithm that positions the shots on the 360-degree screen, and various other video processing utilities. The most complex component was the video engine, which I engineered from scratch to meet very demanding requirements (more details are available on the next page).

Luckily I had the pleasure of working alongside some wonderfully talented people: in particular Matt McGinity (3D graphics/VR guru), as well as Jared Berghold, Ardrian Hardjono and Tim Kreger.

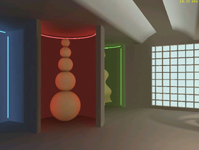

Correctly lit, textured cloth: Torn Up

Sat, 06/10/2007 - 16:20 — balint

I fixed the lighting calculations and thought I would use a built-in texture:

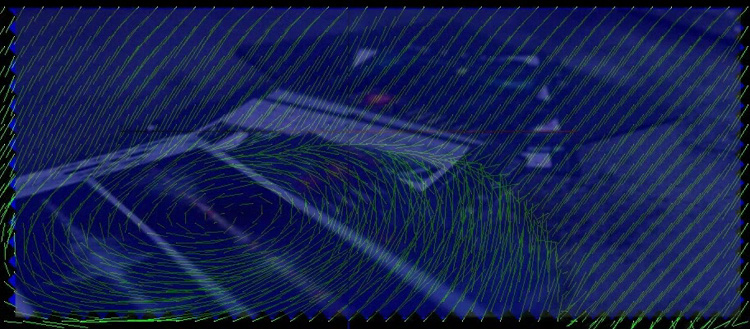

Paused Tornado Simulation Fly-thru

Sat, 06/10/2007 - 16:16 — balint

Here is a fly-through of the standard tornado simulation with some pretty filaments:

DS with Correct Physics!

Sat, 06/10/2007 - 16:08 — balint

Shortly after the presentation day, I ripped out the original physics code that someone (who shall not be mentioned!) had written in the minutes prior to the presentation and replaced it with more 'physically correct' code:

Genetic Programming: 3D Visualisation in Python

Mon, 30/07/2007 - 17:36 — balint

This is a GUI frontend to the genetic programming assignment given in this subject. The aim is to evolve a wall-following robot. The program provides multiple visualisations of the process. It was written with Janice Leung - many thanks for the beautiful widgets! Developed on (but not for) Linux using Python and its bindings & add-ons: PyQt, PyOpenGL, PIL and psyco. A README is available; it contains more information about the code used to render the robot & world.

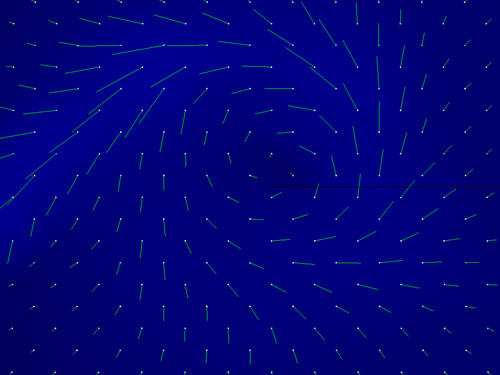

Motion Vector Visualisation

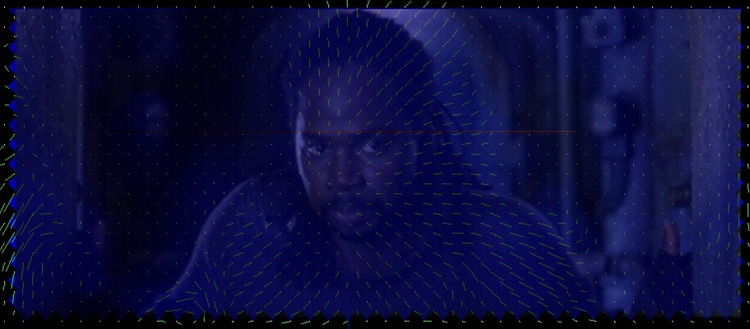

Mon, 07/05/2007 - 18:51 — balint

Using my modified version of ffdshow, which sends a video's motion vectors via UDP to an external application, I visualised the motion vectors from The Matrix: Reloaded inside my fluid simulation. The grid resolution is set based upon the macro-block resolution in the video sequence and each type-16x16 motion vector controls one spatially-matching point on the velocity grid. The following visualisation is taken from the scene where they are discussing the threat to Zion while inside the Matrix before Neo senses that agents are coming (followed by Smith) and tells the ships' crews to retreat.

The final video is on YouTube, with the MVs overlaid on top of the source video.
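To make the grid mapping described above concrete, here is a minimal sketch in Python. It is not the code used in the simulation: the `MotionVector` fields and the function names are hypothetical, and it simply assumes each vector arrives already labelled with its macro-block column and row.

```python
from dataclasses import dataclass

MACROBLOCK = 16  # macro-blocks are 16x16 pixels


@dataclass
class MotionVector:
    # Hypothetical layout: macro-block coordinates plus displacement.
    mb_x: int
    mb_y: int
    dx: float
    dy: float


def grid_size(width, height):
    """Macro-block columns and rows for a frame, rounding up."""
    cols = (width + MACROBLOCK - 1) // MACROBLOCK
    rows = (height + MACROBLOCK - 1) // MACROBLOCK
    return cols, rows


def apply_motion_vectors(vectors, width, height):
    """Build a velocity grid with one cell per macro-block.

    Each 16x16 motion vector sets the velocity of the grid point
    that matches its macro-block position; other cells stay at rest.
    """
    cols, rows = grid_size(width, height)
    grid = [[(0.0, 0.0) for _ in range(cols)] for _ in range(rows)]
    for mv in vectors:
        if 0 <= mv.mb_x < cols and 0 <= mv.mb_y < rows:
            grid[mv.mb_y][mv.mb_x] = (mv.dx, mv.dy)
    return grid
```

For a 720x576 frame this gives a 45x36 velocity grid; vectors that fall outside the grid are ignored.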

Blue Milk

Mon, 07/05/2007 - 18:50 — balint

This is the new-and-improved fluid simulation in action. I'm perturbing the 'blue milk' with my mouse. Watch the darker region form and expand behind the point of perturbation. Due to the finer resolution of the velocity grid, the linear artifacts apparent in the earlier version have disappeared and it now looks smooth in all directions.

2D Fluid Dynamics Simulation

Mon, 07/05/2007 - 18:49 — balint

Velocity-grid-based 2D fluid simulation with effects that interestingly enough resemble Navier-Stokes simulations (well, a little anyway).

Motion Vector Experiments

Mon, 07/05/2007 - 18:28 — balint

The use of motion vectors for motion compensation in video compression is ingenious - another testament to how amazing compression algorithms are. I thought it would be an interesting experiment to get into the guts of a video decoder and attempt to distort the decoded motion vectors before they are actually used to move the macro-blocks (i.e. before they affect the final output frame). My motivation - more creative in nature - was to see what kinds of images would result from different types of mathematical distortion. The process would also help me better understand the lowest levels of video coding.

In the end I discovered the most unusual effects could be produced by reversing the motion vectors (multiplying their x & y components by -1). The stills and videos shown here were created using this technique. Another test I performed was forcing them all to zero (effectively turning off the motion compensation) but the images were not as 'compelling'.
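The two distortions mentioned above are simple to state in code. The following Python sketch is only an illustration of the arithmetic, not the actual libavcodec patch (which would live in the decoder's C code); the function names and the `(x, y)` tuple representation are assumptions.

```python
def reverse(mv):
    """Reverse a motion vector: multiply x and y by -1."""
    x, y = mv
    return (-x, -y)


def zero(mv):
    """Force a motion vector to zero, i.e. no motion compensation."""
    return (0, 0)


def distort(vectors, fn):
    """Apply a distortion to every decoded vector before it is used."""
    return [fn(mv) for mv in vectors]
```

Any other mathematical distortion can be tried by passing a different function to `distort`.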

Here are some stills with Neo and The Oracle conversing from The Matrix: Reloaded after the video's motion vectors have been distorted by my hacked version of libavcodec:

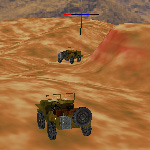

DS (AKA Driving Simulator)

Mon, 07/05/2007 - 17:37 — balint

This is "THE Game Mk II", concocted with my 'partner in crime' Xianhang "Hang" Zhang. There are ~20k lines of code after I coded for about 2 weeks straight.

Humble Beginnings (AKA THE Game)

Mon, 07/05/2007 - 17:23 — balint

For the tutorials of the second-session-first-year C course, I joined the advanced tutorial where we decided our end-of-term goal would be to create a little networked game. What high hopes we had... (And I still haven't taken down the message board.)

Presentation Day

Mon, 07/05/2007 - 13:57 — balint

This is the presentation we gave to demonstrate Teh Engine during the final Computer Graphics lecture in front of a full lecture hall of ~300 students.

(Thanks Ashley "Mac-man" Butterworth for operating the camera.) Please excuse my 'ah's and 'um's - it happens when I'm really tired. Was up for >48 hours.

It should be noted that I fixed the physics so the car behaves properly. Please read the previous page for more detail about the engine.

Teh Engine

Mon, 07/05/2007 - 13:50 — balint

Teh Engine is a graphics engine I wrote in C++ using OpenGL. It boasts many features but can also be considered as re-inventing the wheel. However, I believe writing such a complex piece of software is a 'rite of passage' for anyone seriously interested in computer graphics and creating well-designed code.

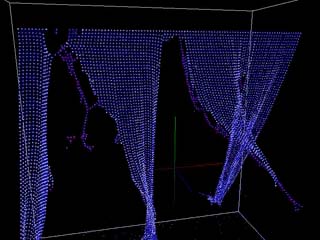

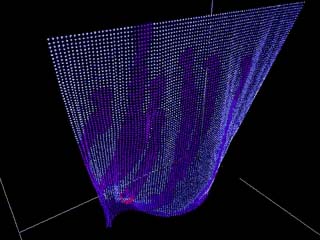

Cloth

Mon, 07/05/2007 - 12:14 — balint

Building on the foundations of the particle simulation, my friend Dom De Re and I decided to dabble in the creation of cloth. The parameters are highly tunable and make for interesting results and 'explosions'.

Having come to rest under gravity (the orange particles at the bottom indicate a collision with the floor):

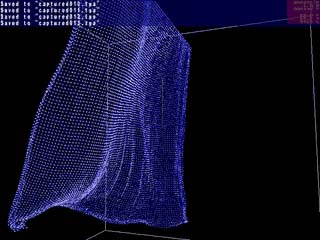

Graphical Enhancements

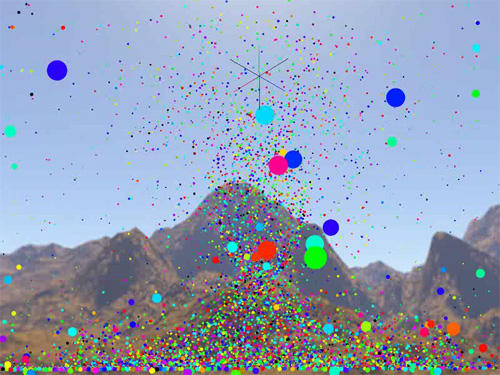

Mon, 07/05/2007 - 11:56 — balint

Although I like the basic particle-line aesthetic presented in the previous pictures and videos, I felt it was time to add a bit of colour, texture and lighting to the simulation.

Here you can see me tearing my face up in a lit environment:

I re-enabled the skybox in Teh Engine and used an image of the full-saturation hue wheel to give the particles a 'random' colour:

(I think this is reminiscent of the Sony Bravia LCD TV ad!)
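As a rough illustration of picking a 'random' full-saturation colour, here is a small Python sketch. Note the swap: Teh Engine sampled an image of the hue wheel, whereas this sketch computes the colour directly with the standard `colorsys` module. The function name is hypothetical.

```python
import colorsys
import random


def random_hue_colour(rng=random):
    """Return an (r, g, b) colour with a random hue.

    Saturation and value are fixed at 1.0, which corresponds to a
    point on the rim of a full-saturation hue wheel.
    """
    hue = rng.random()  # 0.0 <= hue < 1.0, one full turn of the wheel
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)
```

Passing a seeded `random.Random` instance as `rng` makes the colours reproducible between runs.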

Torn Cloth Tornado

Mon, 07/05/2007 - 11:48 — balint

Here is a newer video of tearing the cloth and enabling the tornado simulation after reducing the cloth to small fragments:

Tearable Cloth

Mon, 07/05/2007 - 10:38 — balint

To make the simulation even more fun I made it possible to tear the cloth by shooting a red bullet at the grid. The red sphere is pulled downward under the influence of gravity and breaks any constraints within its radius.
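The tearing rule can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the engine's C++ code: constraints are modelled as pairs of particle indices, and a constraint is treated as 'within the radius' when either of its end particles lies inside the sphere. The function name is hypothetical.

```python
import math


def tear(constraints, positions, centre, radius):
    """Return the constraints that survive the bullet.

    constraints: list of (i, j) particle index pairs
    positions:   list of (x, y, z) particle positions
    centre:      current (x, y, z) position of the bullet sphere
    radius:      radius of the bullet sphere
    """
    def inside(i):
        return math.dist(positions[i], centre) <= radius

    # Assumption: a constraint breaks if either end is inside the sphere.
    return [(a, b) for (a, b) in constraints
            if not (inside(a) or inside(b))]
```

Calling this once per simulation step with the bullet's updated position removes links along its path as it falls.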

The early videos follow - click to download them. The later (and much better) videos can be found throughout the following pages.

- Tearing the cloth

- Colouring the cloth

- Running the tornado with connected particles

- Blowing in the wind

- External view of tearing the cloth

- Zoom from view of the tornado to another

Tornado #1: Initial Implementation

Mon, 07/05/2007 - 10:33 — balint

This video demonstrates the tornado in action:

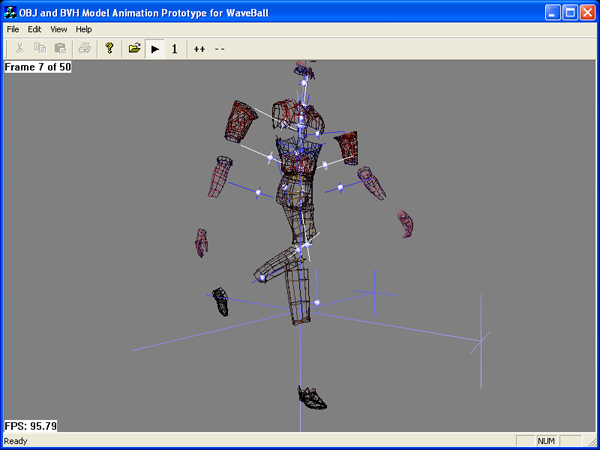

Walker

Sat, 05/05/2007 - 12:36 — balint

I wanted to try some skeletal animation so I wrote this test program.

It imports meshes, materials and animations from Poser and plays them back.

An exploded figure walking:

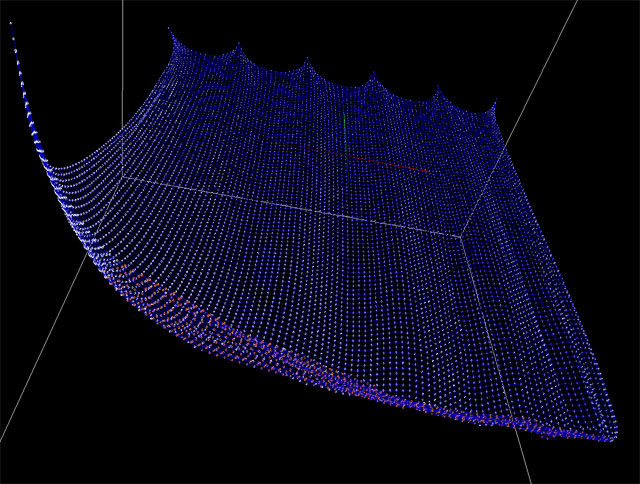

Verlet-integration based particle simulations

Fri, 04/05/2007 - 17:18 — balint

I have been continually developing a Verlet-integration based particle system inside Teh Engine and have produced a number of interesting results. The two main themes of simulated phenomena are tornados and cloth. You can read more about these individual experiments in the next sections, and watch videos of the results.

An excellent resource for Verlet-integration can be found at Gamasutra.
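For readers new to the technique, a single position-Verlet step looks roughly like the Python sketch below. It shows the textbook update rule, not Teh Engine's C++ implementation; the function name and the tuple-based vectors are assumptions made for brevity.

```python
def verlet_step(pos, prev, accel, dt):
    """Advance one particle by one time step.

    Velocity is never stored: it is implied by the difference
    between the current and previous positions.

        new = pos + (pos - prev) + accel * dt^2

    Returns (new_position, new_previous_position).
    """
    new = tuple(p + (p - q) + a * dt * dt
                for p, q, a in zip(pos, prev, accel))
    return new, pos
```

A particle at rest (current and previous positions equal) under gravity starts to fall on the first call, and feeding the returned pair back in on each step continues the motion.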

Here are some stills:

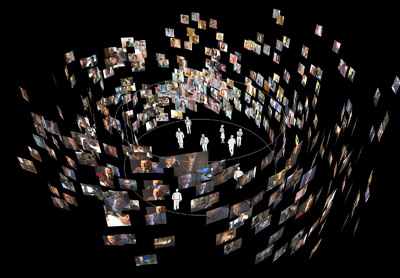

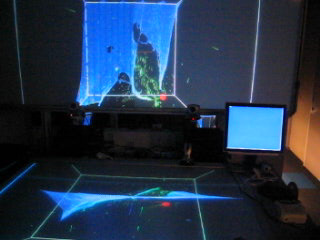

TVisionarium Mk II (AKA Project T_Visionarium)

Tue, 01/05/2007 - 16:13 — balint

An inside view into iCinema's Project T_Visionarium:

(We tend to drop the underscore though, so it's referred to as TVisionarium or simply TVis).

This page summarises (for the moment below the video) the contribution I made to TVisionarium Mk II, an immersive eye-popping stereo 3D interactive 360-degree experience where a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them to explore intuitively their semantic similarities and differences.