Public appearance

Presentation at Dorkbot

Fri, 05/11/2010 - 23:39 - balint

Here are some excerpts of the presentation Matt Robert and I gave at the October 2010 meetup of Dorkbot Sydney.

If you wish to see all of the photos from the set-up phase prior to the presentation on the roof of my apartment block, please have a look at the album in my gallery.

TehDetector

Fri, 04/05/2007 - 14:06 - balint

I wrote a Windows-based video analysis and processing framework to underpin the research I undertook for my undergraduate thesis.

Some of the features it boasts:

- DirectShow-based: Enables analysis and processing of any video/audio format that DirectShow supports.

- Frame-accurate seeking in an MPEG-2 stream: I implemented this handy feature which, when used with certain filters, enables frame-accurate seeking (which is otherwise not possible with the standard filter graph, i.e. source filter and demultiplexer).

- Live streaming TV input: I use a terrestrial Digital Video Broadcasting (DVB-T) card to feed TehDetector live video, which it processes in real time. It also supports offline analysis of stored content.

- Extensible detector architecture: Detectors (analysis components) can be written in C++ and added at will to the framework to extend its capabilities. I implemented several detectors in a hierarchical fashion to find television commercials in a live stream.

- Automatic frame caching: The framework automatically caches video frames and optimises analysis during runtime by also caching various calculations performed on an incoming frame.

- Event store/viewer: Detectors can output 'events' that describe a detected feature in the video, which are automatically stored and managed by the framework. These events can later be reviewed in an intuitive manner.

- Lots of others: detector timing profiler, signal strength meter, playback rate control, filter graph management, bookmarking, frame dumping, extensive keyboard shortcuts...
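To give a flavour of how the extensible detector architecture, per-frame calculation caching and event store listed above could fit together, here is a minimal C++ sketch. It is not TehDetector's actual code: every name in it (`Frame`, `FrameContext`, `Detector`, `BlackFrameDetector`, `Framework`) is hypothetical, no DirectShow is involved, and the black-frame check is only a stand-in detector chosen for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <memory>
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// All types below are hypothetical illustrations, not TehDetector's real API.
struct Frame {
    std::int64_t index;              // position of the frame in the stream
    std::vector<std::uint8_t> luma;  // greyscale pixel values
};

// An 'event' describes a feature that a detector found in the video.
struct Event {
    std::int64_t frameIndex;
    std::string detector;
    std::string description;
};

// Wraps one frame and caches calculations so detectors can share results.
class FrameContext {
public:
    explicit FrameContext(const Frame& frame) : frame_(frame) {}
    const Frame& frame() const { return frame_; }

    // Returns the cached value for `key`, computing it on first request only.
    double cached(const std::string& key,
                  const std::function<double(const Frame&)>& compute) {
        auto it = cache_.find(key);
        if (it == cache_.end()) {
            it = cache_.emplace(key, compute(frame_)).first;
        }
        return it->second;
    }

private:
    const Frame& frame_;
    std::map<std::string, double> cache_;
};

// Interface that every pluggable analysis component implements.
class Detector {
public:
    virtual ~Detector() = default;
    virtual std::string name() const = 0;
    virtual void analyse(FrameContext& ctx, std::vector<Event>& events) = 0;
};

// Average brightness of a frame; 0 for an empty frame.
double meanLuma(const Frame& f) {
    if (f.luma.empty()) return 0.0;
    double sum = std::accumulate(f.luma.begin(), f.luma.end(), 0.0);
    return sum / static_cast<double>(f.luma.size());
}

// Stand-in detector: emits an event for frames darker than a threshold.
class BlackFrameDetector : public Detector {
public:
    explicit BlackFrameDetector(double threshold) : threshold_(threshold) {
        assert(threshold >= 0.0);
    }
    std::string name() const override { return "black-frame"; }
    void analyse(FrameContext& ctx, std::vector<Event>& events) override {
        double mean = ctx.cached("meanLuma", meanLuma);
        if (mean < threshold_) {
            events.push_back({ctx.frame().index, name(), "near-black frame"});
        }
    }

private:
    double threshold_;
};

// Owns the detectors, runs them on each frame and stores their events.
class Framework {
public:
    void addDetector(std::unique_ptr<Detector> d) {
        detectors_.push_back(std::move(d));
    }
    void process(const Frame& frame) {
        FrameContext ctx(frame);  // one cache per incoming frame
        for (auto& d : detectors_) d->analyse(ctx, events_);
    }
    const std::vector<Event>& events() const { return events_; }

private:
    std::vector<std::unique_ptr<Detector>> detectors_;
    std::vector<Event> events_;
};
```

In this sketch a new detector is added by subclassing `Detector` and registering it with `addDetector`. Any detector that asks the `FrameContext` for `"meanLuma"` on the same frame reuses the first result instead of recomputing it.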

Real-time detection of commercials on television

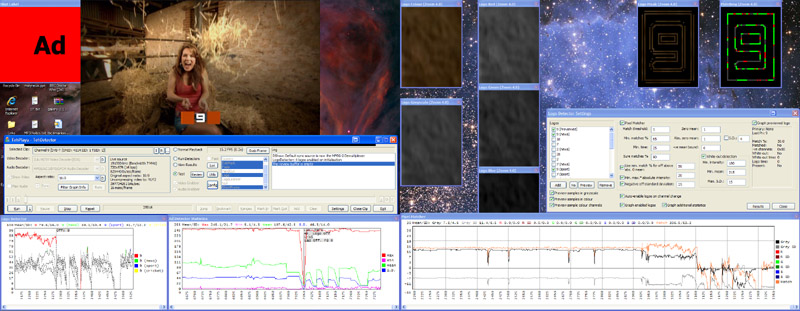

Fri, 04/05/2007 - 12:35 - balint

For my undergraduate honours thesis I conducted research into the unbuffered real-time detection of commercials on television with a view to muting the volume when ads are being broadcast. The research itself dealt with examining the features required to enable robust real-time detection. I developed a sophisticated video analysis and processing framework to underpin experimentation and compilation of results.

The following screenshots show the system running live (click on one to see the full-res image):

TVisionarium Mk II (AKA Project T_Visionarium)

Tue, 01/05/2007 - 16:13 - balint

An inside view into iCinema's Project T_Visionarium:

(We tend to drop the underscore though, so it's referred to as TVisionarium or simply TVis).

This page summarises (for the moment, below the video) the contribution I made to TVisionarium Mk II, an immersive, eye-popping, stereo 3D, interactive 360-degree experience where a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them to intuitively explore their semantic similarities and differences.