T_Visionarium (AKA Project TVisionarium Mk II)

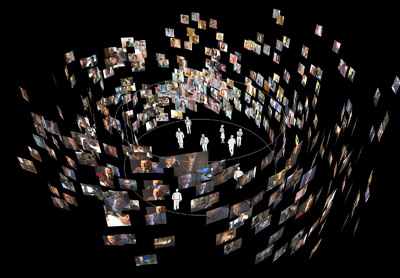

This series of pages summarises the contribution I made to TVisionarium Mk II, an immersive, eye-popping, stereo 3D, interactive 360-degree experience in which a user can search through a vast database of television shows and rearrange their shots in the virtual space that surrounds them to intuitively explore their semantic similarities and differences.

It is a research project undertaken by iCinema, The iCinema Centre for Interactive Cinema Research at the University of New South Wales (my former uni) directed by Professor Jeffrey Shaw and Dr Dennis Del Favero. More information about the project itself, Mk I and the infrastructure used, is available online.

I was contracted by iCinema to develop several core system components during an intense one-month period before the launch in September 2006. My responsibilities included writing the distributed MPEG-2 video streaming engine that enables efficient clustered playback of the shots, a distributed communications library, the spatial layout algorithm that positions the shots on the 360-degree screen, and various other video processing utilities. The most complex component was the video engine, which I engineered from scratch to meet very demanding requirements (more details are available on the next page).

Luckily I had the pleasure of working alongside some wonderfully talented people: in particular Matt McGinity (3D graphics/VR guru), as well as Jared Berghold, Ardrian Hardjono and Tim Kreger.

My Contribution

I wrote the following major system components:

1) A multi-threaded, load-balancing MPEG-2 video decoder engine, featuring:

- Automatic memory management & caching

- Level-Of-Detail support and seamless transitions

- Continuous playback or shot looping (given cut information)

- Asynchronous loading and destruction

[Initial tests indicate it can play over 500 videos simultaneously on one computer (with 2 HT CPUs and 1GB of RAM at the lowest LOD). TVisionarium is capable of displaying a couple of hundred videos without any significant degradation in performance, but there's so much still to optimise that I would be surprised if it couldn't handle in excess of 1000.]

With my latest optimisations, TVisionarium is able to play back 1000 shots simultaneously!

While profiling the system, total CPU usage averages around 90-95% on a quad-core render node!

This indicates that those optimisations have drastically minimised lock contention and support far more fluid rendering.
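To illustrate the load-balancing idea from the feature list, here is a minimal Python sketch (not the engine's actual code) that spreads videos across decoder threads by always handing the next video to the least-loaded worker. The `LOD_COST` table, the `assign_videos` function and the cost figures are all assumptions made up for this example.

```python
import heapq

# Hypothetical relative decode cost per level of detail (0 = full detail).
LOD_COST = {0: 8.0, 1: 4.0, 2: 1.0}


def assign_videos(video_lods, num_workers):
    """Greedily assign each video to the currently least-loaded worker.

    video_lods maps a video id to its level of detail.
    Returns (assignment, loads), both keyed by worker index.
    """
    # Min-heap of (accumulated cost, worker index).
    heap = [(0.0, worker) for worker in range(num_workers)]
    heapq.heapify(heap)
    assignment = {worker: [] for worker in range(num_workers)}

    # Place the most expensive videos first so the cheap ones fill the gaps.
    by_cost = sorted(video_lods.items(), key=lambda item: -LOD_COST[item[1]])
    for video_id, lod in by_cost:
        load, worker = heapq.heappop(heap)
        assignment[worker].append(video_id)
        heapq.heappush(heap, (load + LOD_COST[lod], worker))

    loads = {
        worker: sum(LOD_COST[video_lods[v]] for v in videos)
        for worker, videos in assignment.items()
    }
    return assignment, loads


if __name__ == "__main__":
    shots = {"a": 0, "b": 0, "c": 0, "d": 0, "e": 2, "f": 2, "g": 2, "h": 2}
    assignment, loads = assign_videos(shots, num_workers=2)
    print(assignment, loads)
```

A real engine would presumably rebalance continuously as shots change LOD or are loaded and destroyed; this sketch only shows a one-off static assignment.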

Have a look at TVis in the following video:

This is an in-development 'video tube' test of the video engine:

(Watch it on youtube.com to leave comments/rate it if you like.)

2) (Yet another) distributed communications library:

Although we use an existing system to efficiently send smaller pieces of information to each node in the cluster simultaneously (via UDP), there is a large amount of data that must be transmitted using a guaranteed protocol (i.e. TCP). This library boasts:

- Asynchronous I/O

- Overlapped I/O

- Smart memory management

- Automatic master/slave/stand-alone configuration

- Automatic reconnection on failure

- Support for remote monitoring of the end application through (yet another) serialisation system I also wrote

3) Integrating these components into the actual system running on top of Virtools Dev in a clustered environment, which required me to output some seriously cool code to achieve:

- 'SynchroPlay' - the clustered video loading & synchronisation protocol/system to ensure videos would start playing back at the same time

- 'Real-time playback' mode on both the master and slaves to ensure decoded video frames remain in lock-step with each other, despite high computational loads and late frame deliveries

- Video playback 'Simulation mode' on the master node so it can spend its CPU time controlling the renderers instead of worrying about the video frames themselves

- Physics-based modelling of video window layout in the 3D environment using the Open Dynamics Engine

(The following video demonstrates how one half-side of the windows, which are modelled as spheres, arrange themselves around the master window.)
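The 'Real-time playback' mode among the clustered playback features keeps frames in lock-step even when a node falls behind. One common way to get that behaviour, sketched here in Python as an assumption rather than a description of the actual protocol, is to derive the frame to display purely from a shared clock, so a late node skips frames instead of drifting. The function name `frame_for_time`, its parameters and the 25 fps rate are all hypothetical.

```python
def frame_for_time(elapsed_ms, fps, first_frame, last_frame, loop=True):
    """Return the frame that should be on screen elapsed_ms after the
    agreed start time, for a shot spanning first_frame..last_frame.

    Every node evaluates this against the same shared clock, so nodes
    agree on the frame regardless of how many frames each one has
    managed to display so far.
    """
    length = last_frame - first_frame + 1
    # Integer arithmetic avoids floating-point rounding differences
    # between nodes.
    index = (elapsed_ms * fps) // 1000
    if loop:
        index %= length                 # shot looping (given cut information)
    else:
        index = min(index, length - 1)  # hold the final frame
    return first_frame + index


if __name__ == "__main__":
    # A node that stalled and only renders again at t = 2040 ms jumps
    # straight to the frame every other node is showing.
    print(frame_for_time(2040, 25, first_frame=100, last_frame=149))
```

The design choice is that lateness costs dropped frames, never desynchronisation: no node needs to know how far behind another one is.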

4) Various other utilities and apps, e.g. a DirectShow-based frame extractor, a TVisionarium/MPEG-2-based frame extractor and a stand-alone MPEG-2 video player that was used as the testing environment for the aforementioned video engine.
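As noted for the communications library (item 2), bulk data has to travel over TCP. Because TCP delivers a byte stream rather than discrete messages, a library of this kind needs some way to mark message boundaries. The Python sketch below shows one standard approach, length-prefixed framing. It is an illustration under that assumption, not the library's real wire format, and `encode_message` and `MessageDecoder` are hypothetical names.

```python
import struct

# Assumed header: a 4-byte big-endian payload length.
HEADER = struct.Struct("!I")


def encode_message(payload: bytes) -> bytes:
    """Prefix the payload with its length so the receiver can split the stream."""
    return HEADER.pack(len(payload)) + payload


class MessageDecoder:
    """Reassembles complete messages from arbitrarily sized TCP chunks."""

    def __init__(self):
        self._buffer = bytearray()

    def feed(self, chunk: bytes):
        """Add received bytes and return every message completed so far."""
        self._buffer.extend(chunk)
        messages = []
        while len(self._buffer) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buffer)
            end = HEADER.size + length
            if len(self._buffer) < end:
                break  # payload not fully received yet
            messages.append(bytes(self._buffer[HEADER.size:end]))
            del self._buffer[:end]
        return messages


if __name__ == "__main__":
    stream = encode_message(b"load shot 42") + encode_message(b"play")
    decoder = MessageDecoder()
    # Simulate the stream arriving split at an awkward position.
    print(decoder.feed(stream[:5]) + decoder.feed(stream[5:]))
```

The decoder keeps partial data between calls, which is what lets it sit behind asynchronous reads that return whatever number of bytes happens to be available.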

Video Behind the Scenes

1000 videos can be seen playing back simultaneously!

This is a preview video produced by iCinema:

This is an older video I made with my handycam:

(Please excuse the poor quality.)

Appearances in TV News

09/01/2007 - SBS World News:

August 2006 - Channel Nine News:

Events

T_Visionarium was officially launched on 08/01/2008 as part of the 2008 Sydney Festival. Please read my blog post about it. Here are some pictures:

The festival banner:

Crowd before the speeches:

The digital maestros (Matt McGinity & me):

In March 2007, I held a private exhibition for my friends (click on the image to see the photo gallery):